How I explain the essence of LLMs to non-technical folks

by having them try to make sense of Chinese writing

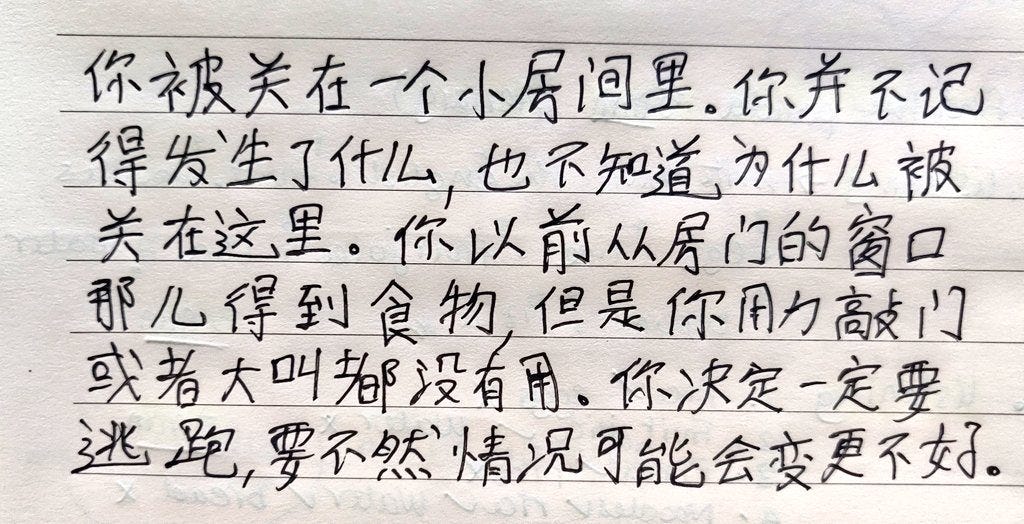

Suppose I give you this piece of Chinese handwriting and ask you to figure out what it means.

What are you gonna do?

(Assuming, of course, that you don’t know any Chinese.)

Because Chinese is vastly different from the languages you probably know (English or other European languages), you won’t be able to “cheat” by transferring your existing knowledge onto this task, the way you could with Spanish if you already knew, say, English and Russian.

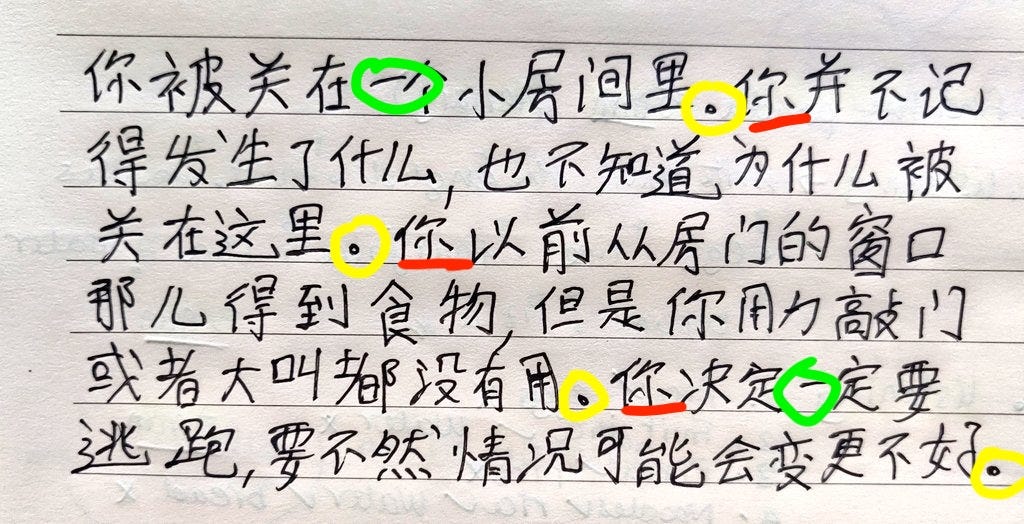

So the only real way forward is to look for patterns. At first, perhaps, you’ll notice that the dot symbol “.” appears several times in the text. Interesting. Then you’ll see that the symbol following the dot is the same on three occasions. You’ll make a mental note of that: “okay, the dot is often followed by that weird curly symbol.” Next you’ll probably notice the dashes. Then the commas. And so on and so forth.

Now, suppose I give you trillions of documents like this one. Yes, trillions. And suppose you have a much faster visual system and a lot more brain power: you can read all of them in a few months and hold all those patterns in your memory. Not perfectly, like a database, but loosely, kinda like humans do.

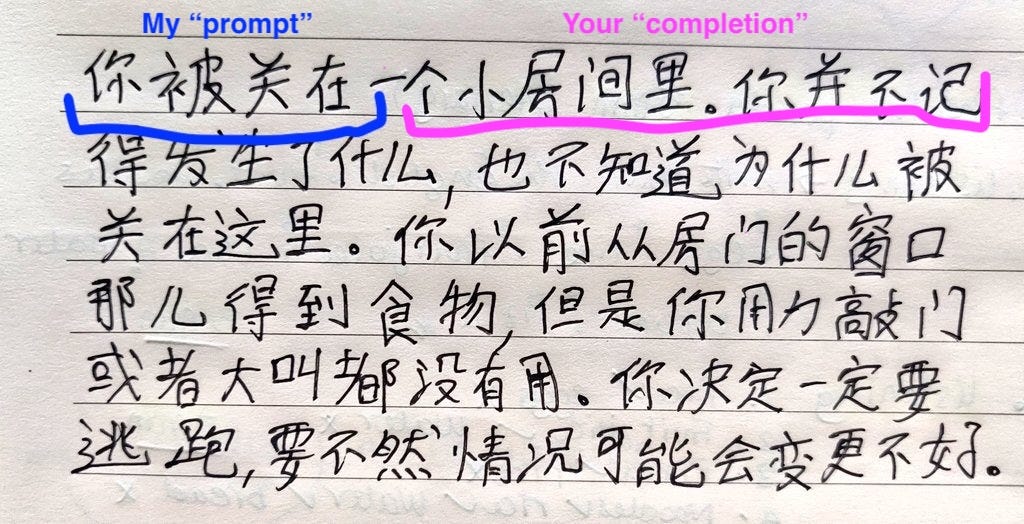

After that “training” is done, if I give you a sequence of characters, like the first few in this image, and ask you to continue writing, you’ll be able to produce meaningful Chinese writing WITHOUT HAVING ANY CLUE WHATSOEVER WHAT IT SAYS OR MEANS.
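For the curious, here’s a toy version of that “notice what follows what, then continue” game in Python. It’s a deliberately crude sketch, not how real LLMs work: real models are neural networks trained on tokens (chunks of text) and look at far more than just the previous character. The two-sentence corpus and the function names `train` and `continue_text` are made up purely for illustration.

```python
from collections import Counter, defaultdict

def train(texts):
    """Count, for every character, which characters follow it and how often."""
    follows = defaultdict(Counter)
    for text in texts:
        for current, nxt in zip(text, text[1:]):
            follows[current][nxt] += 1
    return follows

def continue_text(follows, prompt, length):
    """Extend the prompt by repeatedly appending the most frequent follower."""
    out = prompt
    for _ in range(length):
        last = out[-1]
        if last not in follows:
            break  # never saw this character in training: no pattern to lean on
        out += follows[last].most_common(1)[0][0]
    return out

# A tiny, made-up "training set" (real models see trillions of tokens).
corpus = ["我爱你。你好。", "你好吗？我很好。"]
model = train(corpus)
print(continue_text(model, "你", 3))  # prints 你好。你
```

Notice that the code never knows what any character means; it only knows which one tended to come next. And when you hand it a character it never saw, it simply has nothing to say.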

You’ll be able to write blog posts, memos, letters, and much more. You’ll be able to do math, perhaps even calculus, if your memory is decent. You could write poetry, novels, ANYTHING really, as long as those kinds of symbol sequences showed up many times in the training set I gave you.

In other words, you’ll be very skillful and able to play many roles: from text editor to freelance writer to mathematician, and even more “informational”, idea-based ones like coach or doctor. STILL WITHOUT KNOWING WHAT YOU’RE REALLY SAYING.

That’s the fascinating and incredibly beautiful thing about language models. If your training data is legit AND your algo is decent AND you’ve got tons of compute for your training runs, you end up with a model that can do all those things without really understanding what it’s doing, just like you would be able to say meaningful things in Chinese without knowing what you’re saying.

Does that make you intelligent? Depends on how you define it, of course. Yes, you can do many things. But there’s also something you can’t do, something humans seem to do very easily. If I give you a sequence of characters that was NOT in the training data (in other words, a novel problem), you will struggle, even if the problem looks relatively simple. (For more on this, check out Dwarkesh Patel’s podcast episode with François Chollet, and the ARC Prize.)

It makes you capable, that’s for sure. And that’s exactly what LLMs are.